Who spreads fake news? On Twitter, humans are more likely culprits than bots, new study suggests

False claims spread 'farther, faster, deeper and more broadly than the truth,' MIT researchers find

False news shared on Twitter spreads "significantly farther, faster, deeper and more broadly than the truth," according to a new study from a Twitter-funded research lab. The study also found that humans, not bots, are more likely to spread false news.

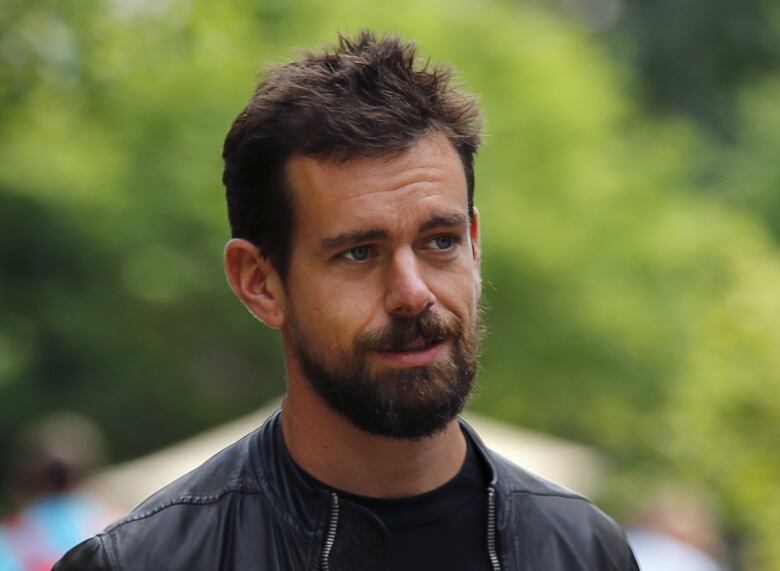

The report comes at a crucial time for Twitter, whose chief executive, Jack Dorsey, has admitted that the company needs to do more to curb abuse, harassment and misuse of its platform.

In recent months, the social network has faced withering criticism from U.S. lawmakers for underestimating the extent of foreign influence on its platform.

In a January submission to Congress, Twitter revised a prior disclosure, saying that more than 50,000 Russian-linked bots and 3,800 human operatives were responsible for tweeting content related to the 2016 U.S. election.

Researchers from the Massachusetts Institute of Technology (MIT) set out to determine how true and false information spread differently across social media, and to what extent human judgment plays a role.

Their findings suggest that Twitter users are more likely to share and amplify false news because such stories are more novel, and therefore more shareable, than factual stories.

They define novelty as information that "is not only surprising, but also more valuable" for making decisions or for portraying oneself as an insider who knows things others don't.

"When you're unconstrained by reality, when you're just making stuff up, it's a lot easier to be novel," said Sinan Aral, one of the study's co-authors.

As for the role of automated internet programs, or bots, the researchers are quick to point out that their findings shouldn't be taken to mean bots don't matter, or don't have an effect.

Rather, "contrary to conventional wisdom," they write, bots accelerated the spread of both false news and true news but did so at about the same rate.

"When you remove them from your analysis, the difference between the spread of false and true news still stands," said Soroush Vosoughi, who also co-authored the study. "So they can't be the sole reason as to why false information seems to be spreading so much faster."

The study was published in the March 9 issue of the scientific journal Science.

'Rumour cascades'

While the spread of false news on social media has always had real-world consequences (for example, leading to drops in the stock market), the 2016 U.S. presidential election has emerged as a watershed example of how far and wide that influence can reach.

To study this effect, researchers looked at around 126,000 tweets, or what they term "rumour cascades," shared by Twitter users from 2006 to 2017, and measured how those tweets spread across the social network.

News was not limited to mainstream sources. Instead, it was broadly defined as any "asserted claim" containing text, photos or links to information that had been evaluated by one of six independent fact-checking groups. About three million people retweeted the claims sampled by the researchers (true, false and mixed) more than 4.5 million times.

"Whereas the truth rarely diffused to more than 1,000 people, the top one per cent of false-news cascades routinely diffused to between 1,000 and 100,000 people," the paper says.

Unsurprisingly, political content was the most popular, and researchers noted spikes in the spread of false political rumours during both the 2012 and 2016 U.S. presidential elections.

What might come as a surprise, however, is that neither the number of followers a person had nor the amount of time they spent on Twitter was enough on its own to explain the difference in the spread of false news versus accurate news.

"Falsehoods were 70 per cent more likely to be retweeted than the truth," the authors wrote, "even when controlling for the account age, activity level and number of followers and followees of the original tweeter."

"The biggest single factor seems to be human nature, human behaviour," said co-author Deb Roy.

More than just 'Russian bots'

The work was a collaboration between researchers at MIT's Media Lab and the school's Laboratory for Social Machines (LSM). The LSM receives funding from Twitter to pursue undirected research, says Roy, who founded the LSM and was Twitter's chief media scientist until last fall.

The relationship allowed the MIT researchers something that few academics have: access to Twitter's raw data firehose, a historical archive of every tweet ever made, including those that have been deleted.

Other researchers say that the lack of access to this kind of data, not only from Twitter but also from other platforms such as Facebook, is the biggest impediment to doing more of this kind of work.

"It is really challenging to get access to enough data that is comprehensive enough that we can say things conclusively," says Elizabeth Dubois, an assistant professor at the University of Ottawa who has studied the presence of political bots in Canada.

In the same issue of Science, another group of researchers echoed this sentiment in an article of their own, arguing that social media platforms have "an ethical and social responsibility" to contribute what data they can.

"Blaming everything on Russian bots isn't going to serve anyone," said Dubois, "because there are a lot more actors than just Russian bots out there."
